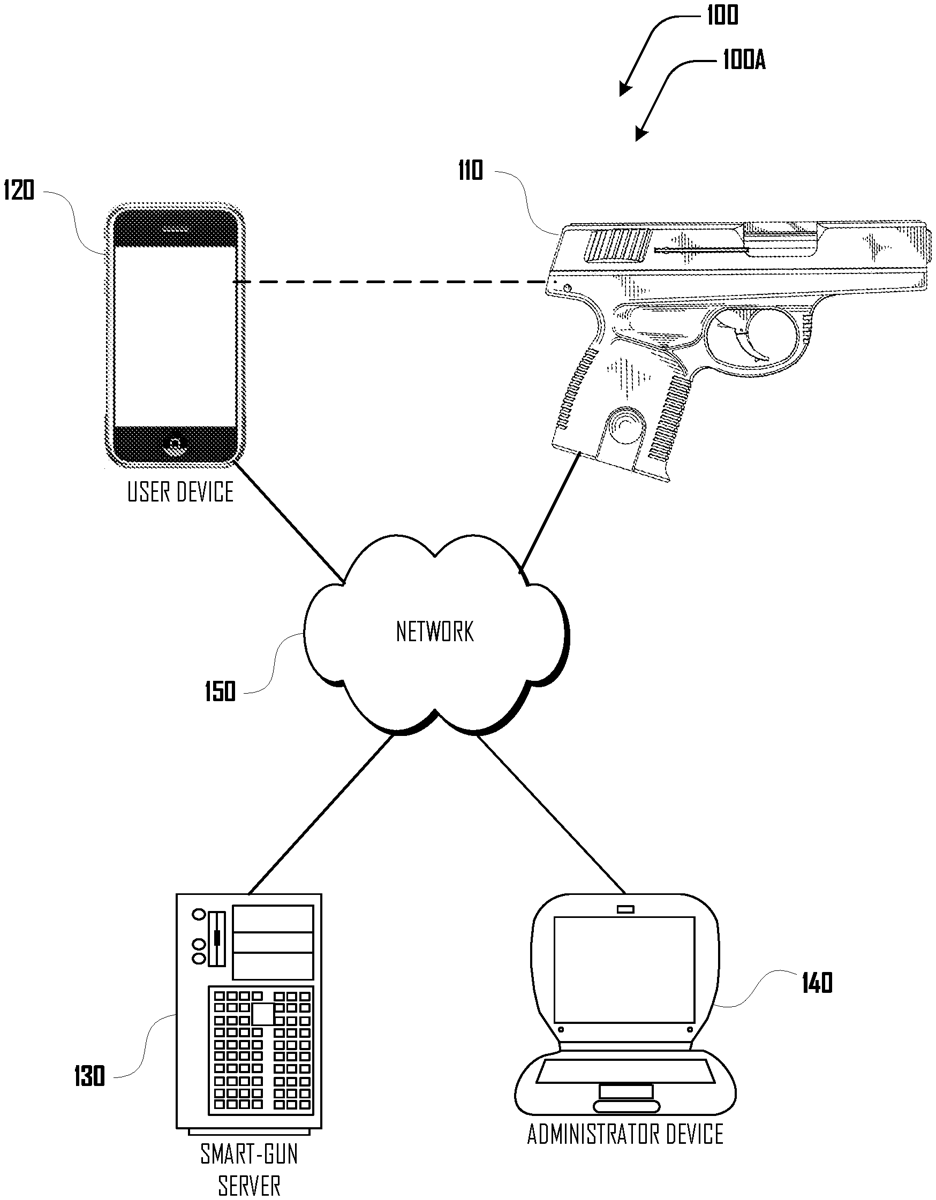

US12467704 - Smart-gun artificial intelligence systems and methods

The patent describes a smart-gun system that utilizes artificial intelligence to assess the states of both the firearm and its user, determining real-time safety levels and automatically adjusting the gun’s configuration, such as engaging the safety. Additionally, the system generates and presents conversation statements through a synthesized audio interface based on the assessed states, enhancing user interaction and safety awareness.

Claim 1

1. A computer-implemented method of a smart-gun system, the method comprising:

obtaining a set of smart-gun data comprising:

smart-gun orientation data that indicates an orientation of a smart-gun being handled by a user, the smart-gun including a barrel, a safety, and a firing chamber,

smart-gun configuration data that indicates a plurality of configurations of the smart-gun, including safety on/off, firing configuration, and ammunition configuration, and

smart-gun audio data including an audio recording from a microphone of the smart-gun,

determining, using artificial intelligence, one or more states of the smart-gun based at least in part on the set of smart-gun data;

determining, using artificial intelligence, one or more states of the user based at least in part on the set of smart-gun data;

determining a real-time safety level based at least in part on the one or more states of the smart-gun and the one or more states of the user;

determining a smart-gun configuration change based at least in part on the real-time safety level, the one or more states of the smart-gun and the one or more states of the user, the smart-gun configuration change including putting the smart-gun on safety or otherwise making the smart-gun inoperable to fire;

implementing the smart-gun configuration change automatically without human intervention, including by the user handling the smart-gun, the implementing the smart-gun configuration change including actuating the smart-gun to put the smart-gun on safety or otherwise making the smart-gun inoperable to fire;

determining to generate a conversation statement based at least in part on the real-time safety level, the one or more states of the smart-gun and the one or more states of the user;

generating, in response to the determining to generate the conversation statement, an LLM prompt based at least in part on the real-time safety level, the one or more states of the smart-gun and the one or more states of the user;

submitting the LLM prompt to a remote LLM system;

obtaining a conversation statement from the remote LLM system in response to the LLM prompt; and

presenting the conversation statement via an interface of the smart-gun that includes at least a microphone, the conversation statement presented as a synthesized audio speaking voice having a persona or character.
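To make the order of the claimed steps easier to follow, here is a minimal Python sketch of the data flow in Claim 1. It is an illustration under stated assumptions, not the patented implementation: every name (`SmartGunData`, `run_step`, and the rest), the numeric thresholds, and the scoring formula are hypothetical. Simple hand-written rules stand in for the "artificial intelligence" determinations, flipping a boolean stands in for physically actuating the safety, and the sketch stops at building the prompt text rather than contacting any remote LLM system.

```python
from dataclasses import dataclass, replace
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class SmartGunData:
    """Hypothetical container for the three data types named in the claim."""
    barrel_pitch_deg: float  # orientation data (assumed representation)
    safety_on: bool          # configuration data: safety on/off
    chamber_loaded: bool     # configuration data: ammunition
    audio_transcript: str    # stand-in for the microphone recording


def determine_gun_states(data: SmartGunData) -> List[str]:
    # Placeholder rules standing in for the claim's AI-based determination.
    states = []
    if data.chamber_loaded:
        states.append("loaded")
    if not data.safety_on:
        states.append("safety_off")
    if data.barrel_pitch_deg > 45.0:  # arbitrary illustrative threshold
        states.append("barrel_raised")
    return states


def determine_user_states(data: SmartGunData) -> List[str]:
    # Placeholder keyword rule; the claim does not say how user states are derived.
    return ["distressed"] if "help" in data.audio_transcript.lower() else []


def safety_level(gun_states: List[str], user_states: List[str]) -> float:
    # Illustrative score: 1.0 is safest; subtract 0.25 per risk state, floor at 0.
    return max(0.0, 1.0 - 0.25 * (len(gun_states) + len(user_states)))


def configuration_change(level: float, gun_states: List[str]) -> Optional[str]:
    # Engage the safety when the level is low and the safety is currently off.
    if level < 0.5 and "safety_off" in gun_states:
        return "engage_safety"
    return None


def build_llm_prompt(level: float, gun_states: List[str], user_states: List[str]) -> str:
    return (
        f"Safety level: {level:.2f}. "
        f"Gun states: {', '.join(gun_states) or 'none'}. "
        f"User states: {', '.join(user_states) or 'none'}. "
        "Reply with one short spoken safety statement."
    )


def run_step(data: SmartGunData) -> Tuple[SmartGunData, Optional[str]]:
    """Run one pass of the claimed sequence; returns updated data and an optional prompt."""
    gun = determine_gun_states(data)
    user = determine_user_states(data)
    level = safety_level(gun, user)
    if configuration_change(level, gun) == "engage_safety":
        data = replace(data, safety_on=True)  # stands in for actuating the safety
    # Only generate a prompt when the level is below an assumed threshold.
    prompt = build_llm_prompt(level, gun, user) if level < 0.75 else None
    return data, prompt
```

In this sketch, calling `run_step(SmartGunData(60.0, False, True, "Help me"))` returns data with `safety_on` set to `True` and a prompt string, while a holstered, unloaded input with the safety already on returns no prompt. The configuration change is applied before the prompt is built, which mirrors the order of the steps in the claim.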

Google Patents

https://patents.google.com/patent/US12467704

USPTO PDF

https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/12467704